I can't guarantee I'll be able to follow up on every suggestion - it's getting to the heavy end of the semester when everything's due - but I am on the lookout for fruitful new angles to try. You're certainly not wrong that there are a lot of arbitrary steps here.

As a game designer, my goal isn't necessarily to get definitive proof of a theory of human cognition, but to get some useful heuristics that can guide the design of puzzle creation tools. A hard science approach to this would probably be much more rigorous.

The main device I'm using to try to combat the confounding factors is the law of large numbers. 😁 You can see from the charts that even with all the noise in individual ratings, aggregating them to averages still allows some pretty clear trends to emerge, like the tight correlation between the logarithm of time to completion and the average difficulty rating. This is even more dramatic when we drop down to the granularity of individual moves (I've added some new slides about that today).

I've used a computer solver to find possible ways of deducing correct moves in each of the 39145 puzzle states a player visited in the data set. Then I mapped the actual moves players made (and the time it took them) to those machine deductions - finding a match for 95%, or about 67000 moves. (The remaining 5% could be a mix of guesses/faulty reasoning, players repeating parts of the puzzle they'd already solved but out of order, or leaps of reasoning the solver doesn't account for. I initially thought a 5% guess rate would be too high, but after going through the comments folks left on the survey, about 18% of them remarked on guessing, or guessing repeatedly, so it's not out of line.)

That large number lets me divide up the moves into buckets that are similar in some traits (like how many constraints were used, of which types, how many possibilities needed to be considered/rejected, etc.), and compute an average deduction time from the hundreds or thousands of moves all matching those traits. This goes a long way to cutting through the noise of individual skill or situational variation, and pulls out the underlying trend. I used this technique to construct a regression, giving a formula for an estimated difficulty measure based on those algorithmically-computable stats.
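For anyone curious what the bucketing and regression in this reply might look like in practice, here's a minimal Python sketch. It is not my actual analysis code: the trait tuple `(constraints_used, possibilities_rejected)`, the function names, and all the numbers are made up for illustration, and it fits a line on a single trait, whereas the real regression uses several stats.

```python
from collections import defaultdict


def bucket_means(moves, min_count=1):
    """Group moves by their trait tuple and average the deduction time.

    moves: iterable of (traits, seconds), where traits is a hashable tuple.
    Buckets with fewer than min_count moves are dropped as too noisy.
    """
    buckets = defaultdict(list)
    for traits, seconds in moves:
        buckets[traits].append(seconds)
    return {t: sum(v) / len(v) for t, v in buckets.items() if len(v) >= min_count}


def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept (one predictor)."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum(
        (x - mx) ** 2 for x in xs
    )
    return slope, my - slope * mx


# Hypothetical data: traits = (constraints_used, possibilities_rejected).
moves = [
    ((1, 0), 2.0), ((1, 0), 4.0),
    ((2, 1), 5.0), ((2, 1), 7.0),
    ((3, 2), 8.0), ((3, 2), 10.0),
]

means = bucket_means(moves)
xs = [traits[0] for traits in means]   # predictor: constraints used
ys = [means[traits] for traits in means]  # response: mean seconds per bucket
slope, intercept = fit_line(xs, ys)
```

Averaging inside each bucket before fitting is what lets the law of large numbers do its work: individual moves are noisy, but a bucket with hundreds of moves gives a fairly stable mean.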

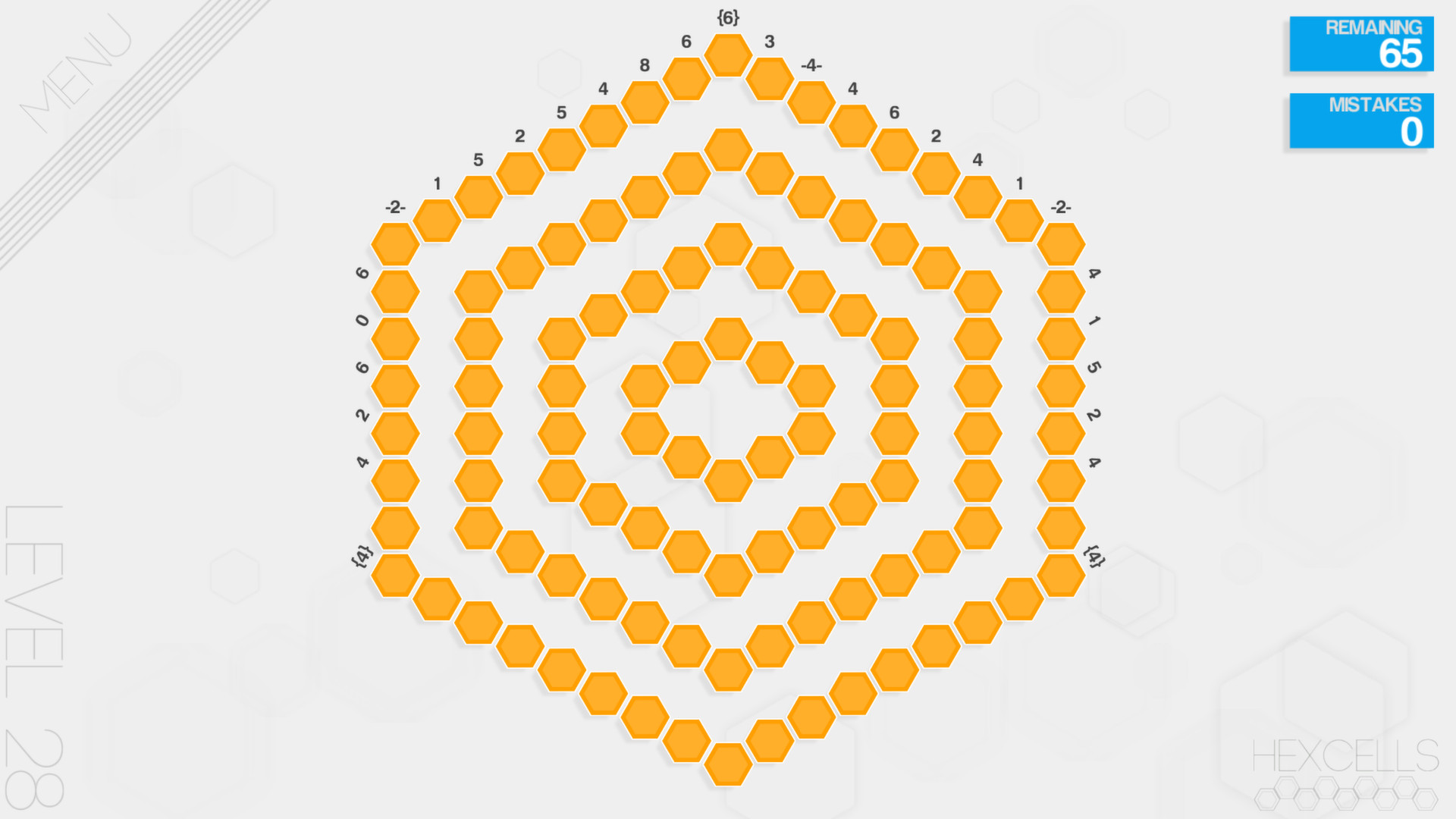

#Reset hexcells free

One of the biggest surprises so far is that the average difficulty rating a level got is almost exactly the logarithm of the average time spent solving it (about a 90% match). That bodes well for the next phase of my analysis, where I'll be digging into the difficulty of individual moves. If time is such a close proxy for perceived difficulty, I can use the time delays between moves to get more granular detail about what types of deduction are more difficult to spot.

Feel free to dig through if you're curious, and if you have analyses you're curious about, I'd love to hear your ideas.
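If you'd like to try the log-time comparison on your own data, here's a rough Python sketch of one way to do it. The record layout `(level_id, seconds, rating)`, the function names, and the sample numbers are all assumptions for illustration, not the survey's actual format or results.

```python
import math
from collections import defaultdict


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)


def level_averages(solves):
    """Return per-level log(mean solve time) and mean difficulty rating.

    solves: iterable of (level_id, seconds, rating) records.
    """
    times = defaultdict(list)
    ratings = defaultdict(list)
    for level, seconds, rating in solves:
        times[level].append(seconds)
        ratings[level].append(rating)
    levels = sorted(times)
    log_mean_time = [math.log(sum(times[lv]) / len(times[lv])) for lv in levels]
    mean_rating = [sum(ratings[lv]) / len(ratings[lv]) for lv in levels]
    return levels, log_mean_time, mean_rating


# Made-up records, just to exercise the functions.
solves = [
    ("A", 30, 1), ("A", 50, 2),
    ("B", 100, 3), ("B", 140, 3),
    ("C", 400, 4), ("C", 600, 5),
]

levels, log_mean_time, mean_rating = level_averages(solves)
r = pearson(log_mean_time, mean_rating)
```

Note that this takes the logarithm of the average time per level, matching the description above; averaging the logs of individual times instead would be a slightly different measure.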

Following up on my earlier post about researching how players play and rate Hexcells levels, I wanted to share with the community some of my preliminary data analysis. (Still a work in progress - I'll be adding more explanations as I refine my final presentation, so apologies for it being mostly just a wall of graph p**n at the moment 😅)

Thanks to your help, I was able to collect data on over 1200 puzzle solutions by 96 players, and more than 15 completions of every level in the survey set.
